Technology has evolved at a rapid rate, and that point was perfectly demonstrated throughout the last decade.

The 2010s featured a plethora of innovations that radically changed the landscape in computing, so read on as we recap some of the most important developments.

Quantum Computing

Sites such as ComputingNews.com are always eager to report on the ‘next big thing’ in computing and 2019 may well have delivered just that.

Quantum computers have long been viewed as the holy grail in the industry, and there were numerous breakthroughs during the year.

IBM announced its first commercial quantum computer for use outside of the lab at CES, while Google claimed later in the year to have achieved ‘quantum supremacy’.

Some experts say that 2019 was the best year for quantum physics since Albert Einstein started thinking about relativity, highlighting the magnitude of the progress made.

iPad

It may come as some surprise to discover that a product which has become a part of millions of households across the world was not launched until 2010.

Apple’s creation of a device that sat between a smartphone and a laptop was initially seen as a luxury that was unnecessary in an already saturated tech market.

However, it proved to be an inspired addition to the ranks, sparking numerous other manufacturers into releasing their own tablets.

It is estimated that more than 500 million iPads have been sold since the initial launch, a figure that demonstrates the impact it has had.

Microsoft Azure

The concept of cloud computing had been around for a little while, but it wasn’t until the 2010s that it truly took off.

Azure is a cloud service created by Microsoft for building, testing, deploying and managing applications and services through its data centres.

Azure is the biggest competitor to Amazon Web Services, which was launched in 2006, and cloud platforms like these now underpin much of the modern internet and many of our digital experiences.

Azure’s most notable success against AWS came towards the end of the decade, when it was chosen by the Pentagon to modernise the US military’s cloud infrastructure.

Adobe Creative Cloud

First announced in 2011 and launched the following year, Adobe Creative Cloud is a set of applications and services used in graphic design, video editing, web development and photography.

Creative Cloud is sold as a monthly or annual subscription, with the software downloaded over the internet and installed directly on a local computer.

Adobe’s decision to move to a cloud-based subscription model paved the way for many other software companies to follow suit.

Creative Cloud was initially hosted on Amazon Web Services, but a new agreement saw the software switch to Microsoft Azure in 2017.

Chromebooks

Chromebooks created a new market of cheaper laptops for users needing basic computing and internet services.

Acer and Samsung were the first two companies to launch Chromebooks in 2011 and the marketplace is now awash with similar machines.

Chromebooks are primarily used to perform tasks using the Google Chrome browser, with most applications and data residing in the cloud rather than on the machine itself.

They tend to be particularly popular in the education sector, where they are viewed as a more robust alternative to tablets.

Nvidia G-Sync

Screen stuttering was previously the bane of gamers across the world, which is why the arrival of the Nvidia G-Sync chip is seen as such a landmark moment in computing.

First used in desktop monitors, G-Sync now features in many other devices, including laptops, using adaptive refresh rate technology to prevent the screen from tearing when you are gaming.

It works by allowing a video display’s refresh rate to adapt to the frame rate of the outputting device rather than the outputting device adapting to the display.
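To make that idea concrete, here is a minimal Python sketch comparing a fixed-rate display with an adaptive one. It is a simplified toy model, not Nvidia’s actual implementation: the function names, the 10 ms refresh interval and the 14 ms frame time are all assumptions chosen for illustration.

```python
def fixed_refresh_times(frame_ready_ms, refresh_interval_ms):
    """Fixed-rate display with vsync: each finished frame waits for the next refresh tick.

    Simplified model (assumption): frames take longer than one refresh interval,
    so two frames never compete for the same tick.
    """
    # Integer ceiling division rounds each ready time up to the next tick.
    return [-(-t // refresh_interval_ms) * refresh_interval_ms for t in frame_ready_ms]


def adaptive_refresh_times(frame_ready_ms, min_interval_ms):
    """Adaptive display: refresh as soon as a frame is ready, but never
    faster than the panel's minimum refresh interval."""
    shown = []
    last = None
    for t in frame_ready_ms:
        if last is not None and t < last + min_interval_ms:
            t = last + min_interval_ms
        shown.append(t)
        last = t
    return shown


def intervals(times):
    """Gaps between consecutive on-screen frames, in milliseconds."""
    return [later - earlier for earlier, later in zip(times, times[1:])]


# Hypothetical numbers: the GPU finishes a frame every 14 ms,
# while the fixed display refreshes every 10 ms.
frame_ready = [14 * i for i in range(1, 6)]  # [14, 28, 42, 56, 70]

fixed = fixed_refresh_times(frame_ready, refresh_interval_ms=10)
adaptive = adaptive_refresh_times(frame_ready, min_interval_ms=10)

print(intervals(fixed))     # [10, 20, 10, 10] -> uneven pacing, seen as stutter
print(intervals(adaptive))  # [14, 14, 14, 14] -> even pacing
```

In this model the fixed display shows frames at uneven intervals because each one has to wait for the next tick, while the adaptive display simply follows the GPU’s pace.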

AMD subsequently released a similar display technology called FreeSync, which serves the same function as G-Sync but is royalty-free for manufacturers to use.

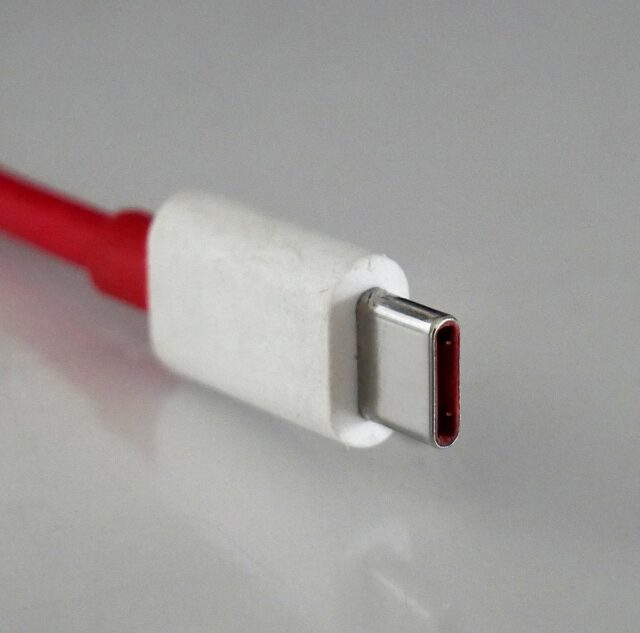

USB-C

First launched in 2014, USB-C is an industry-standard connector for both data and power using a single cable.

Type-C ports are now found on a wide range of devices, including laptops, external hard drives, smartphones and tablets.

The USB-C connector looks similar to a micro USB connector, although it is distinguished by its oval shape and by being reversible, so it can be plugged in either way up.

One of USB-C’s most useful features is its ability to carry video signals and power simultaneously, allowing you to connect different devices through one port if you have the correct adaptor.

Windows 10

It is fair to say that Microsoft has had a chequered history with its Windows operating system, particularly where versions 8 and 8.1 were concerned.

However, the company steadied the ship in style with Windows 10, an OS that has proved to be reasonably future-proof thus far.

Windows 10 was well received by the tech industry, with critics praising Microsoft’s decision to run with a desktop-oriented interface.

By January 2018, Windows 10 had surpassed Windows 7 as the most widely used version of Windows worldwide.

AMD Ryzen

The launch of Ryzen marked a return to form for AMD, firing the company to the forefront of a high-end CPU market that Intel had dominated over the preceding years.

AMD’s CPUs had fallen progressively behind those from Intel in both single and multi-core performance, but their new product brought them back with a bang.

The company’s mainstream Ryzen chips are split across three families, with their top-end processor blowing Intel’s equivalent out of the water from a price perspective.

Many gamers now favour Ryzen over Intel, with the chips’ strong price-to-performance ratio making them a hugely desirable option.

2010s Computing Advances – The Final Word

History is likely to view the 2010s as one of the most innovative decades for the computing industry.

Given their current popularity, it seems unthinkable that iPads did not exist before 2010, yet they are now commonplace in households across the world.

With experts continuing to push the boundaries, it will certainly be interesting to see how the computing landscape looks in another ten years’ time.